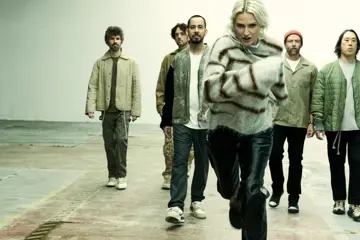

Holly Herndon

In a matter of months, AI has become the hot topic on everyone's lips, but in the arts scene, AI and the issues that come with the growing technological advancement have been around for years. Whether it’s a visual artist’s art being stolen and repurposed for profit that the artist can’t access or writers like myself worried they’ll soon be losing out to a million more ChatGPT models stealing bylines, the growing AI debate seems to be getting more complex and more unruly. But what about music?

Programs like Amper and Boomy litter the market, providing users with basic AI platforms that create a song, albeit an objectively bad one, that users can use according to the specific companies' lengthy terms & conditions. Google has even dipped a finger into the proverbial pie by creating MusicLM, an AI model that makes music based on the user's text input. It’s the first of its kind but has yet to be released due to copyright issues.

All of these programs learned by using specific data, aka songs, to produce their own AI-generated music, but whose music did they learn from? Could it be yours? If so, can you opt out? Or, at the very least, be paid for your contribution to AI learning? And possibly the most important question: what does the future look like for musical artists with the continued advancement of AI technology?

Let’s take these questions one at a time.

How Do You Know If Your Songs Are Being Used To Train AI?

It’s one of the most common questions in the music AI debate, but currently, there are very few ways to find out if your songs are being used to train AI unless a company explicitly tells you that your songs were included in the training data.

Visual artists now have a program called haveibeentrained.com, created by multi-disciplinary artist Holly Herndon, to determine whether their art has been used to train AI. Additionally, the site has the option to opt in or out of AI training for participating companies.

There is no such tool for music, although Herndon has posted on her Twitter account noting that services like haveibeentrained.com will need to become available for music within the next few years to keep pace with the growing technology.

Is It Really As Bad As It Seems?

Professor Rebecca Giblin, Director of the Intellectual Property Research Institute of Australia (IPRIA) and an expert in copyright law surrounding creative industries, argues that the issue might not be the fact that your music was used to train AI but rather the circumstances surrounding it.

“If we zoom out to a far enough degree of abstraction, in some sense, we as humans are all being trained on someone else's creativity our whole lives. We are exposed to music and art, and nobody goes off and creates anything in a vacuum; otherwise, it would be terrible. Imagine music that was made by someone who had never heard a song before,” she explains.

“When these technologies are being trained on everything, that raises different issues to when they’re being trained on the creativity of a single artist to generate something that then sounds like that artist.

“There is a whole spectrum, I think. There is no clear answer that says, yes, this is ok, and no, this is not ok. We’re going to see artists create new kinds of music from AI - and we’re only just starting to understand what that might look like. But of course, in other ways, AI has the potential to hugely impact entertainers' economic rights, copyrights, and their moral rights.”

What Can You Do About It?

Say you believe an AI has been trained exclusively on your work and is now producing songs that sound like you.

Unfortunately, under Australia's current law, there is not a whole lot you can do. A musician's individual published works are protected by copyright law, but their overall style is not.

To claim copyright infringement, an artist must prove that their song was copied into the system and prove the algorithm was trained on the song or artist it allegedly infringes on. It’s not easy to reverse-engineer a neural network and see what songs it was fed.

Additionally, while there are plenty of examples of lawsuits where musicians were sued by other musicians for copyright infringement, a company could say its AI is a trade secret. Artists would have to fight in court to discover how the program works, a fight most artists lack the resources to afford.

The legal conundrum of AI becomes more complex and confusing the further you delve, and this mainly comes down to the fact that this is the first time we are dealing with a component that is not human but essentially acts like a human.

Do we give technology the same rights and responsibilities as humans, so that an AI that produces music can be tried as a human would be? Or is there danger in awarding those rights to a machine, thus giving AI the power to create music that is seen, legally, as on par with music made by a human artist?

We are technically writing the rules as we go along, which is why so many issues arise.

However, as the AI debate heats up, laws are being reformed. Currently, the Australian government is looking to review its copyright infringement laws. While this doesn’t quite touch the sides of the whole issue, this review allows Australian artists to voice their worries, opinions and suggestions, which could lead to change that benefits the creator.

You can submit your thoughts here until March 7.

Will Artists Become Obsolete?

A larger question for many people in the industry is the impending fear that artists will one day become obsolete due to the advancements of AI. Luckily, Professor Giblin doesn’t think that will happen anytime soon.

“Very few people need to be worried that they are going to be losing out to AI-generated music,” she says.

“Now, there are some exceptions. If you’re creating really unchallenging music - elevator-type music - that's a job that can be taken by AI. If you’re creating things that are challenging and complex, that aim to make people feel something, I think that without a very strong degree of human contribution, we are very far from AI being able to do that.

“It’s very similar to what we're seeing with [image creator] - there is a certain kind of soulless stock image that makes me feel kind of sad inside - that’s the kind of thing that can, and will shortly, be replaced with AI.

“You need a human contribution for art that makes you feel something.”

When listening to AI-generated music, it’s rare to get the feeling of wonderment that you might feel when listening to an original piece of music made by a human: a vocal take that feels almost spiritual, or a unique guitar riff that fills you with an immense sense of joy. AI is yet to create something that matches the natural creativity of a human and is many years away from creating anything that can even come close to that.

Holly Herndon, the creator of haveibeentrained.com and AI positivist, recently gave a TED Talk where she demonstrated her AI software Holly+, a custom voice instrument that allows anyone to upload polyphonic audio and receive a download of that music sung back in Herndon’s distinctive voice.

When demonstrating, she invites fellow musician and friend PHER to join her on stage and sing into two separate microphones: one patched through Holly+, and the other simply amplifying PHER’s natural voice.

Instantly, you can hear the natural tics and imperfections in PHER’s voice, making it much more listenable than Holly’s generated one. While the idea of imperfection as perfection might sound counterintuitive, the AI model featured a perfectness that was ultimately unnatural and eerie compared to PHER’s natural timbre.

Nonetheless, the issues with Holly+ do not take away from the technological feat that Herndon has managed to pull off - but does that transfer over to a musical feat? It would depend on the listener.

Holly Herndon, along with others, has embraced the advancement of AI within the arts rather than shying away. By creating Holly+ along with haveibeentrained.com, she’s breaking down a barrier and attempting to merge the worlds of tech and art on artists' terms.

In an interview with Art In America on the creation of her platform, Herndon explicitly states, “Well, AI is just us. AI is human labour obfuscated through a terminology called AI, and our goal is to use technology to allow us to be more human together.”

In this quote, Herndon raises an important point: AI is essentially human labour, and if transparency is achieved, it can be used to help rather than hinder the art scene.

Professor Giblin added to this line of thought, saying that “it’s important that we don’t have a kneejerk response to this [AI] by saying ‘oh we need to fix this by making it illegal to play with.’ The collateral damage that could come with that could be problematic as well.

“We have no idea how much further this can be taken because we’re really at the frontier.”

Regarding ethical questions outside legal frameworks, the debate for artists essentially comes down to consent. As the law evolves to suit the technological age, we will see a new wave of artists positively interacting with AI and willingly lending their music to AI to learn. This is already happening, but only among a small minority.

Possibly, the solution doesn’t lie in a fear-based response of sticking your head in the sand or banning the technology altogether. Instead, it may lie in a constant stream of discourse and cooperation between technology, humans and companies that encourages transparency. At the end of the day, artists need clarity so that they can negotiate how they’d like to proceed, whether that’s removing themselves from the data or asking for remuneration or credit.

Keeping the artist in the equation should always be the solution, but copyright laws and AI programs currently don’t allow for this. Instead, artists are being shut out of the conversation at a time when their contribution is needed most. Thinking of AI and artists in a symbiotic relationship might be the step we need to integrate AI safely into the arts while still protecting the livelihoods of music makers.